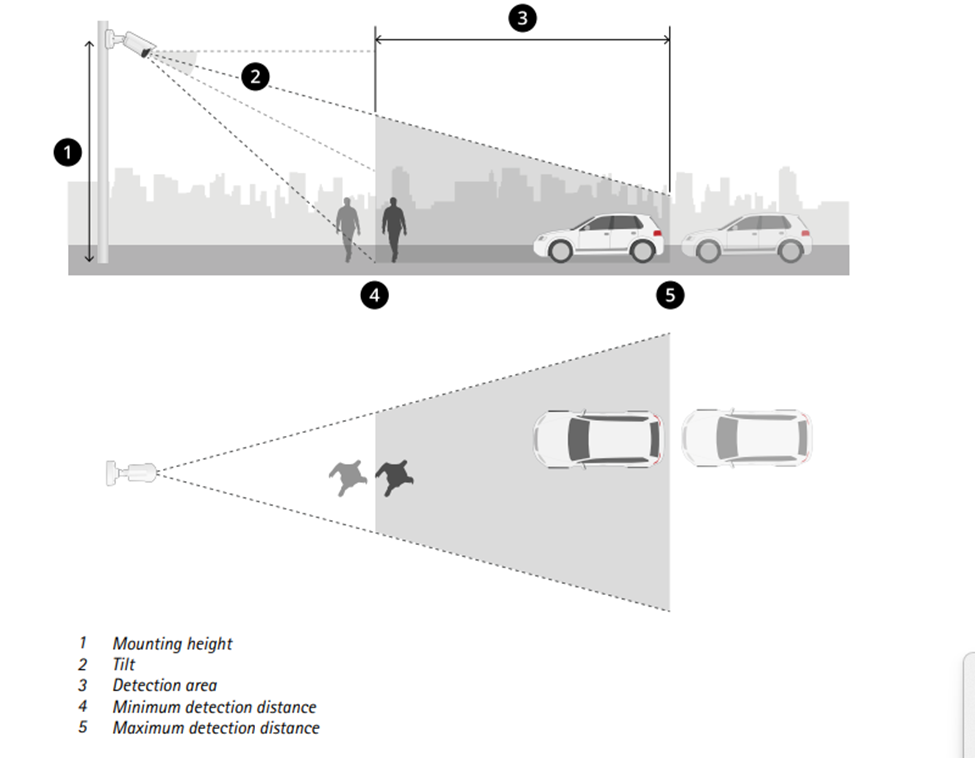

Camera placement

The camera must be placed appropriately for optimal performance. Two factors matter: the distance from the camera to the scene and the camera’s angle to the area of interest.

Relation between height and distance

Optimal performance depends on both the camera’s height and its distance to the objects it needs to have in view.

Minimum distance = Camera height / 2

For example, if the camera height is 2 meters, objects should be at least 1 meter from the camera.
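The rule above can be checked with a few lines of Python. This is only a sketch of the arithmetic: the function names `minimum_distance` and `is_far_enough` are hypothetical and not part of any camera software.

```python
def minimum_distance(camera_height_m: float) -> float:
    """Return the minimum object distance in meters: camera height / 2."""
    if camera_height_m <= 0:
        raise ValueError("camera height must be positive")
    return camera_height_m / 2


def is_far_enough(camera_height_m: float, object_distance_m: float) -> bool:
    """Check whether an object is at least the minimum distance away."""
    return object_distance_m >= minimum_distance(camera_height_m)


# The example from the text: a camera at 2 meters needs at least 1 meter.
print(minimum_distance(2.0))
print(is_far_enough(2.0, 0.5))
```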

Illustration of height versus detection distances

How a camera understands the scene

Humans recognize what is happening in a scene because they understand depth and scale. Cameras, on the other hand, mostly have no depth perception and therefore cannot differentiate between objects at different distances from the camera. For example, a camera that identifies movements in a bed by looking directly at the bed will generate a false alarm if a person walks in front of the bed.

The camera is not able to differentiate between movements generated by a person standing in the line of sight (between the bed and the camera) and movements generated by a person lying in the bed.

In Object Analytics, a scenario can be set to detect either motion or objects (persons). The major advantage of object-based detection is that motion by itself will NOT generate an alarm. Motion-based detection, by contrast, may generate a false alarm because the camera has no perception of depth.

The scene

Let’s look at a common scene: a person walking past their bed. How motion-based and AI-based detection interpret this scene is illustrated and described below.

Person is walking in front of bed

Person has moved past the bed

How motion-based detection analyzes the scene above

Motion-based detection analyzes the scene as a flat canvas with no understanding of depth. A person moving in front of the bed (into the line of sight) will therefore trigger the motion-based alarm in the bed area.

A person moving in front of the bed will cause the motion detection to generate a false alarm.

How AI-based detection analyzes the scene above

AI-based detection also analyzes the scene as a flat canvas with no understanding of depth, but it generates alarms based on humans detected in the scene. The trigger point of the person is at the lower part of the bounding box (where the feet are assumed to be) and will not be within the bed area.

The person moving into the front of the bed will therefore not generate an alarm.

A person moving in front of the bed will not cause the AI-based detection to generate a false alarm, since the point of detection is not within the bed area.
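The difference between the two approaches in the bed scene can be sketched in Python. This is an illustration under stated assumptions, not the camera's actual algorithm: the bed area is simplified to an axis-aligned rectangle, image coordinates grow downward, the trigger point is taken as the bottom center of the bounding box, and all names (`Box`, `motion_alarm`, `object_alarm`) and pixel values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in image pixels (y grows downward)."""
    left: float
    top: float
    right: float
    bottom: float


def overlaps(a: Box, b: Box) -> bool:
    """True if the two rectangles share any area in the flat image."""
    return (a.left < b.right and b.left < a.right
            and a.top < b.bottom and b.top < a.bottom)


def foot_point(person: Box) -> tuple[float, float]:
    """Assumed trigger point: bottom center of the person's bounding box."""
    return ((person.left + person.right) / 2, person.bottom)


def motion_alarm(bed_area: Box, moving_region: Box) -> bool:
    """Motion-based: any movement overlapping the bed area raises an alarm."""
    return overlaps(bed_area, moving_region)


def object_alarm(bed_area: Box, person: Box) -> bool:
    """Object-based: alarm only if the trigger point is inside the bed area."""
    x, y = foot_point(person)
    return (bed_area.left <= x <= bed_area.right
            and bed_area.top <= y <= bed_area.bottom)


# Hypothetical scene: the person stands between the camera and the bed,
# so the body overlaps the bed in the image but the feet are below it.
bed_area = Box(left=200, top=300, right=500, bottom=450)
person = Box(left=300, top=150, right=380, bottom=520)

print(motion_alarm(bed_area, person))  # True: false alarm
print(object_alarm(bed_area, person))  # False: no alarm
```

In this sketch the person's body overlaps the bed area, which is enough for the motion-based check, while the feet land outside the area, so the object-based check stays silent.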

Your requirement affects the camera placement

The use case for event-based supervision must be clearly defined because this dictates the optimal placement for the camera. A good placement ensures that the camera’s algorithm has good conditions to detect humans in the video stream.

The room setup should be documented prior to installation so that the optimal placement of the camera can be decided. For example, to detect a person’s behavior around the bed, the camera must be placed with an optimal line of sight to the bed.

The angle of the camera is important

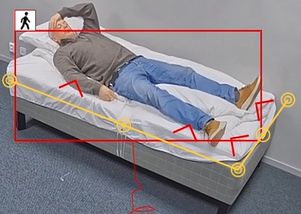

A person, once recognized by the algorithm, is indicated in Object Analytics with a bounding box. The camera angle is important because it affects the person’s proportions in relation to the scene.

Obscured areas may cause the camera to lose track of the person, and therefore the mask (the yellow lines in the videos and screenshots below) may not be triggered. This is most problematic at night, when the camera is using IR (infrared) and the contrast is lower due to the gray-toned video. This effect must be taken into account when setting up the masks.

Falling out of bed is not detected because the floor area is partly covered by the bed, which causes the camera to lose track of the person.

Falling out of bed is detected since there are no covered areas and the person is tracked when leaving the bed.

The scenario will not trigger since the bounding box is still partly outside of the defined line.

The bounding box has crossed the line and the event will be triggered.
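The two cases above can be sketched as a simple check. This is a simplified illustration, not the Object Analytics implementation: it assumes a vertical line at a fixed x position, a person moving from left to right, and that the event fires only once the whole bounding box is past the line. The names `Box` and `has_fully_crossed` and all pixel values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Horizontal extent of a bounding box in image pixels."""
    left: float
    right: float


def has_fully_crossed(box: Box, line_x: float) -> bool:
    """True only when the whole box lies to the right of the vertical line."""
    return box.left > line_x


line_x = 400.0
partly_across = Box(left=360.0, right=440.0)  # still straddles the line
fully_across = Box(left=410.0, right=490.0)   # entirely past the line

print(has_fully_crossed(partly_across, line_x))  # False: no event
print(has_fully_crossed(fully_across, line_x))   # True: event triggers
```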